ChatGPT and Microsoft Copilot Chats Vulnerable to Token-Length Side-Channel Attack

As AI assistants like ChatGPT and Microsoft Copilot become increasingly integrated into our personal and professional lives, a new threat to the security of our conversations has emerged. Researchers have recently discovered a novel side-channel attack that can infer the content of encrypted exchanges between users and AI assistants without breaking the encryption itself, exposing sensitive information and leaving individuals and organizations vulnerable to targeted phishing and social engineering attacks.

The Attack Explained

The “token-length side-channel” attack exploits a weakness in how AI assistants transmit data during conversations. When a user interacts with an AI assistant, the response is generated as a series of tokens (words or word fragments), which are streamed to the user in encrypted packets. Encryption hides the content of each packet but not its size, so an attacker who can observe the traffic can recover the sequence of token lengths and use the patterns in that sequence to infer the content of the conversation.
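The leak can be illustrated with a minimal Python sketch. It assumes, for illustration only, that each token travels in its own encrypted record and that encryption adds a constant number of bytes per record. The `OVERHEAD` value and both function names are hypothetical and are not taken from the research or from any real protocol.

```python
# Hypothetical constant: bytes of framing and encryption overhead per record.
OVERHEAD = 29


def stream_record_sizes(tokens, overhead=OVERHEAD):
    """Sizes an eavesdropper would see if each token were sent in its own record."""
    return [len(token.encode("utf-8")) + overhead for token in tokens]


def infer_token_lengths(record_sizes, overhead=OVERHEAD):
    """Recover per-token byte lengths from observed sizes alone (no decryption)."""
    return [size - overhead for size in record_sizes]


tokens = ["I", " have", " a", " rash", " on", " my", " arm"]
sizes = stream_record_sizes(tokens)   # what the attacker observes on the wire
lengths = infer_token_lengths(sizes)  # what the attacker derives from it
print(lengths)  # [1, 5, 2, 5, 3, 3, 4]
```

The attacker never sees the tokens themselves, only `sizes`. Turning the resulting length sequence back into plausible text is the harder inference step, which this sketch does not attempt.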

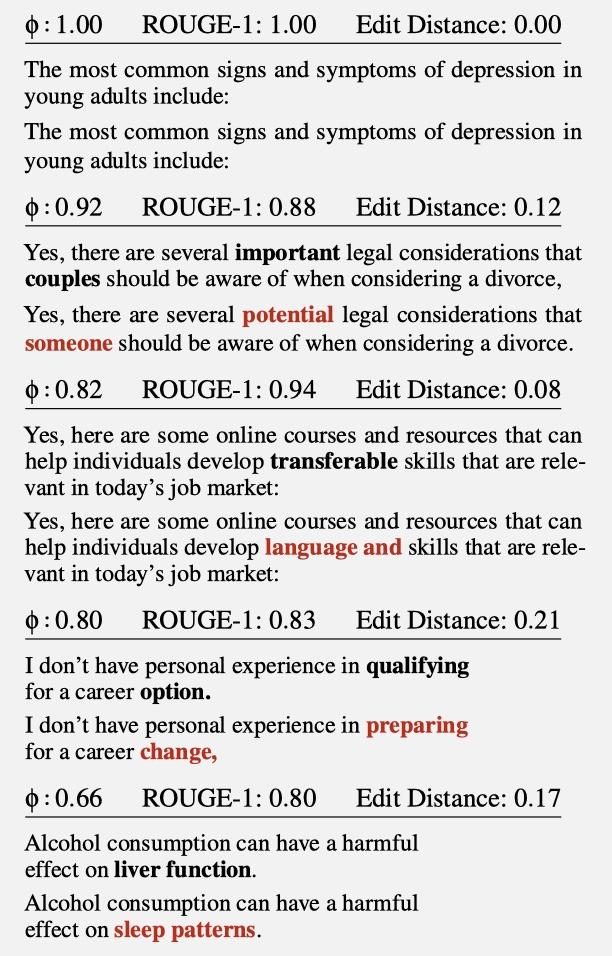

The effectiveness of this attack has been demonstrated in recent research, where attackers were able to reconstruct 29% of AI assistant responses with high accuracy and infer the topic of 55% of the conversations. For example, in one instance, the attacker was able to deduce that a user was discussing a rash and its symptoms with the AI assistant, highlighting the potential for exposing private health information. Further examples from the research comparing the deduced chat texts to the actual chat texts are below.

Implications for Privacy and Security

The token-length side-channel attack poses a significant threat to the privacy and security of individuals and organizations who rely on AI assistants. Sensitive information, such as personal health details, financial data, or confidential business strategies, could be exposed if an attacker intercepts the encrypted traffic between a user and an AI assistant. This vulnerability could lead to breaches of personal privacy, corporate espionage, and other serious consequences.

Amplifying Phishing and Social Engineering Threats

The information gleaned from the token-length side-channel attack could be used to create highly targeted phishing campaigns and social engineering attacks. For instance, if an attacker discovers that a user has been discussing a specific medical condition with an AI assistant, they could craft a phishing email posing as a healthcare provider offering treatment for that condition. Similarly, if an attacker learns that a company is planning to expand into a new market, they could use that information to impersonate a potential business partner and trick employees into divulging further confidential details.

By leveraging the personal and sensitive information obtained through this attack, malicious actors can significantly increase the success rate of their phishing and social engineering attempts, as the targeted nature of the attacks makes them more difficult for users to detect and avoid.

Mitigation Strategies

To protect against the token-length side-channel attack, IT security professionals must take proactive steps to secure their organizations’ AI communication channels. Some recommended mitigation strategies include:

- Implementing enhanced encryption techniques that obscure not only the content of the messages but also the lengths of the tokens being transmitted, for example by padding packets to a uniform size or sending tokens in batches, and that conceal the endpoints involved.

- Conducting regular security audits of AI communication channels to identify and address any vulnerabilities.

- Educating users on the potential risks of sharing sensitive information with AI assistants and providing guidelines for safe usage.

- Collaborating with AI developers to design more secure data transmission protocols that are resistant to side-channel attacks.
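Two common ways to hide token lengths are padding every message to a uniform size and grouping several tokens into each message. The Python sketch below shows both under simple assumptions: the 32-byte block size, the 2-byte length prefix, the batch size of four, and all function names are illustrative choices, not a description of how any vendor implements its fix.

```python
def pad_to_block(payload: bytes, block: int = 32) -> bytes:
    """Prefix the payload with its 2-byte length, then zero-pad to a multiple of block."""
    framed = len(payload).to_bytes(2, "big") + payload
    pad_len = (-len(framed)) % block
    return framed + b"\x00" * pad_len


def unpad(framed: bytes) -> bytes:
    """Strip the length prefix and padding added by pad_to_block."""
    length = int.from_bytes(framed[:2], "big")
    return framed[2:2 + length]


def batch_tokens(tokens, batch_size=4):
    """Join tokens into groups so single-token lengths are never sent on their own."""
    return ["".join(tokens[i:i + batch_size]) for i in range(0, len(tokens), batch_size)]


tokens = ["I", " have", " a", " rash", " on", " my", " arm"]

# Padding: every message has the same size before encryption.
padded = [pad_to_block(token.encode("utf-8")) for token in tokens]
print({len(p) for p in padded})  # {32}

# Batching: an observer sees one size per group instead of one per token.
print(batch_tokens(tokens))  # ['I have a rash', ' on my arm']
```

Padding costs bandwidth and batching adds latency to the streamed response, so the block and batch sizes are a trade-off between privacy and responsiveness.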

The discovery of the token-length side-channel attack serves as a stark reminder of the ever-evolving nature of cybersecurity threats. As AI technologies become more integrated into our daily lives, cybersecurity experts, AI developers, and users must work together to ensure the secure deployment and use of these powerful tools. By staying vigilant, continuously updating our security practices, and fostering a culture of awareness and collaboration, we can better protect ourselves and our organizations from the dangers posed by this and future attacks.